GPU Dedicated Servers Rental

Rent GPU dedicated servers ideal for Deep Learning, NLP, image processing, animation rendering and video transcoding tasks.

Enterprise infrastructure, bare-metal performance and predictable pricing - built for modern GPU workloads.

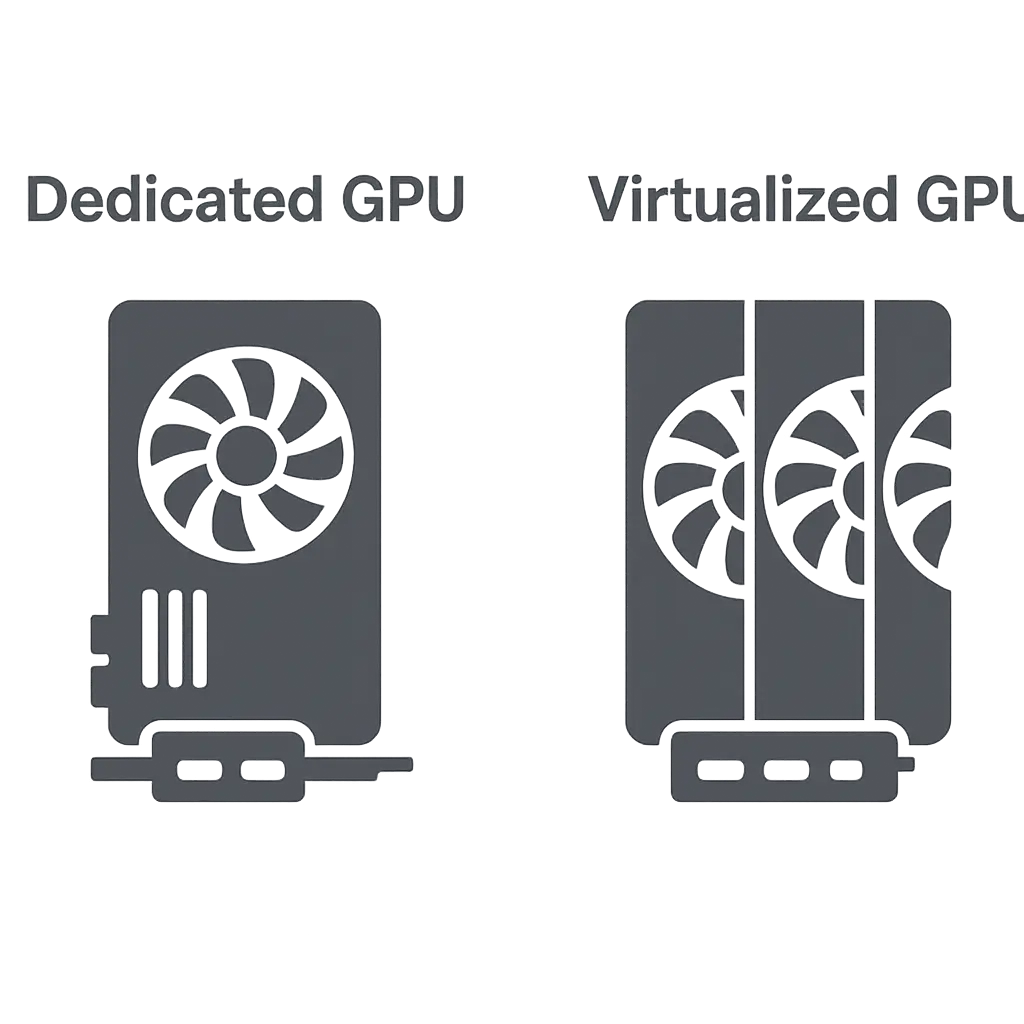

Root access to your GPU server. No noisy neighbors, no virtualization overhead.

Low-latency global routes, IPv4/IPv6, 1-10 Gbps ports, DDoS protection.

Flat monthly rates and clear add-ons for storage, IPs and bandwidth.

Upgrade RAM, storage or add GPUs anytime. Multi-GPU and NVLink support available.

Remote hands, OS reloads and hardware swaps by on-site engineers.

Commit for 3, 6, 12 months or more and save big on your GPU rentals.

When you need the highest possible performance, virtualization gets in the way. Virtualized cloud GPU instances can suffer from slower I/O and contention for shared resources. With a dedicated GPU server, all resources are yours: full CUDA/Tensor cores and VRAM, consistent throughput, and root access, at a fraction of AWS/GCP/Azure cost.
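With root access you can confirm that the whole GPU and its VRAM are visible to the driver. The snippet below is a minimal sketch, assuming a Linux server with the NVIDIA driver installed so that `nvidia-smi` is on the PATH; the helper names `parse_gpu_csv` and `list_gpus` are illustrative and not part of any provided tooling.

```python
import csv
import io
import shutil
import subprocess

# Standard nvidia-smi query: one CSV row per GPU, memory reported in MiB.
QUERY = ["nvidia-smi", "--query-gpu=name,memory.total",
         "--format=csv,noheader,nounits"]


def parse_gpu_csv(text):
    """Parse 'name, memory_mib' rows as printed by the query above."""
    gpus = []
    for row in csv.reader(io.StringIO(text), skipinitialspace=True):
        if len(row) != 2:
            continue  # skip blank or unexpected lines
        name, mem = row
        gpus.append({"name": name.strip(), "memory_mib": int(mem)})
    return gpus


def list_gpus():
    """Return the GPUs visible to the driver, or [] if nvidia-smi is missing."""
    if shutil.which("nvidia-smi") is None:
        return []
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_gpu_csv(out)


if __name__ == "__main__":
    for gpu in list_gpus():
        print(f"{gpu['name']}: {gpu['memory_mib']} MiB")
```

On a multi-GPU plan the script should print one line per card; an empty result usually means the driver is not installed yet.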

Looking for a cloud GPU server as a remote desktop? Rent GPU servers with Windows 10 Pro and connect via Microsoft RDP.

Nvidia GeForce GTX 1080 Ti is based on the Pascal GP102 graphics processor, the same chip used by the Tesla P40.

GeForce GTX 1080 Ti GPUs are good for Deep Learning, animation rendering, video transcoding and real time video processing.

11 GB memory (484 GB/s bandwidth)

3584 Nvidia CUDA cores

11.3 TFLOPS (FP32)

Nvidia GeForce RTX 2080 Ti is based on the TU102 graphics processor (the same processor used by the Titan RTX). In addition to CUDA cores, the RTX 2080 Ti has 544 Tensor cores that accelerate machine learning applications. The card also has 68 ray tracing cores, which dramatically improve GPU ray tracing performance and are supported in V-Ray, OctaneRender and Redshift.

11 GB memory (616 GB/s bandwidth)

4352 Nvidia CUDA cores

13.5 TFLOPS (FP32)

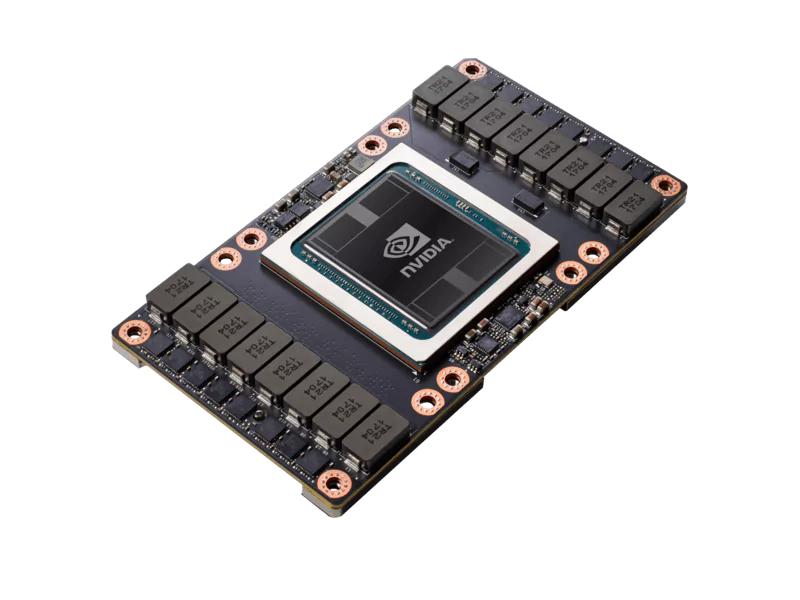

Nvidia Tesla V100 is based on the Nvidia Volta architecture and offers the performance of up to 100 CPUs in a single GPU. The V100 comes in two versions, PCIe and SXM2, with 14 and 15.7 TFLOPS of FP32 performance respectively. It ships with either 16 GB or 32 GB of memory; the 32 GB version lets AI teams fit very large neural network models into GPU memory.

Nvidia Tesla V100 GPUs are good for Machine Learning, Deep Learning, Natural Language Processing, molecular modeling and genomics. Among the GPUs listed here, the Tesla V100 is the only practical choice for applications that require double precision (FP64), such as physics modeling or engineering simulations.

16 or 32 GB memory (900 GB/s bandwidth)

5120 Nvidia CUDA cores

15.7 TFLOPS (FP32)
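To get a rough feel for whether a model fits in 16 GB or 32 GB, you can estimate the memory taken by its weights alone. This is a back-of-the-envelope sketch, not a sizing guarantee: the 80% headroom factor and the 6-billion-parameter model are assumptions chosen for illustration, and training needs considerably more memory for gradients, optimizer state and activations.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weights_gib(num_params, dtype="fp16"):
    """Memory taken by the model weights alone, in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


def fits(num_params, vram_gib, dtype="fp16", headroom=0.8):
    """True if the weights fit in `headroom` of the VRAM.

    `headroom` is an assumed safety margin for the CUDA context and
    workspace buffers; tune it for your own workload.
    """
    return weights_gib(num_params, dtype) <= vram_gib * headroom


if __name__ == "__main__":
    params = 6e9  # hypothetical 6-billion-parameter model
    for vram in (16, 32):
        for dtype in ("fp32", "fp16"):
            verdict = "fits" if fits(params, vram, dtype) else "does not fit"
            print(f"{dtype} weights ({weights_gib(params, dtype):.1f} GiB) "
                  f"on {vram} GB V100: {verdict}")
```

Under these assumptions the FP32 weights of the example model fit only on the 32 GB card, while the FP16 weights fit on either.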

Nvidia RTX A4000 is the most powerful single-slot GPU for professionals. Built on the NVIDIA Ampere architecture, the RTX A4000 comes with 16 GB of graphics memory with error-correction code (ECC). Our A4000-based servers provide the highest performance per dollar.

16 GB memory (448 GB/s bandwidth)

6144 Nvidia CUDA cores

192 Tensor Cores

19.2 TFLOPS (FP32)

153.4 TFLOPS Tensor Performance

Which GPU Dedicated Server is right for your workload?

| Plan | GPU | Processor | Memory | Storage | Bandwidth | Pricing |
|---|---|---|---|---|---|---|
| A4000 Basic | 1x NVIDIA RTX A4000 | Intel i5-7500 - 4 Cores | 32 GB | 500 GB SSD | Unmetered @ 1 Gbps | $239.00/mo |
| 1080 Ti Basic | 1x NVIDIA GTX 1080 Ti | Intel Xeon E5-2670 - 8 Cores | 32 GB | 500 GB SSD | Unmetered @ 1 Gbps | $159.00/mo |
| A4000 Pro | 1x NVIDIA RTX A4000 | AMD Threadripper 1920X - 12 Cores | 128 GB | 2 TB NVMe | Unmetered @ 1 Gbps | $399.00/mo |
| 2× A4000 Pro | 2x NVIDIA RTX A4000 | AMD Ryzen 7 3700X - 8 Cores | 64 GB | 1 TB NVMe | Unmetered @ 1 Gbps | $549.00/mo |
| 2080 Ti Perf | 1x NVIDIA RTX 2080 Ti | Intel i7-11700K - 8 Cores | 32 GB | 480 GB SSD | Unmetered @ 1 Gbps | $209.00/mo |
| 2× 2080 Ti Perf | 2x NVIDIA RTX 2080 Ti | Intel i7-11700K - 8 Cores | 32 GB | 480 GB SSD | Unmetered @ 1 Gbps | $349.00/mo |

Didn't find the configuration you were looking for? Contact us. We'll quickly make it just the way you want it.

All disks are SSD/NVMe. Upgrades available: +GPU, +RAM, +NVMe, +SSD, 10-100 Gbps uplink.
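One simple way to compare the plans is monthly price per peak FP32 TFLOPS. The sketch below uses only the prices and TFLOPS figures quoted on this page; it assumes that peak throughput scales linearly with GPU count and ignores CPU, RAM and storage differences, so treat it as a first-pass filter rather than a benchmark.

```python
# Peak FP32 TFLOPS per GPU, as listed on this page.
FP32_TFLOPS = {"RTX A4000": 19.2, "GTX 1080 Ti": 11.3, "RTX 2080 Ti": 13.5}

# (plan name, GPU count, GPU model, monthly price in USD) from the plan table.
PLANS = [
    ("A4000 Basic", 1, "RTX A4000", 239.00),
    ("1080 Ti Basic", 1, "GTX 1080 Ti", 159.00),
    ("A4000 Pro", 1, "RTX A4000", 399.00),
    ("2x A4000 Pro", 2, "RTX A4000", 549.00),
    ("2080 Ti Perf", 1, "RTX 2080 Ti", 209.00),
    ("2x 2080 Ti Perf", 2, "RTX 2080 Ti", 349.00),
]


def usd_per_tflops(gpu_count, gpu_model, price):
    """Monthly price divided by aggregate peak FP32 TFLOPS.

    Assumes linear scaling across GPUs, which real workloads rarely reach.
    """
    return price / (gpu_count * FP32_TFLOPS[gpu_model])


def rank_plans(plans=PLANS):
    """Return (name, usd_per_tflops) pairs, cheapest per TFLOPS first."""
    scored = [(name, usd_per_tflops(n, gpu, price)) for name, n, gpu, price in plans]
    return sorted(scored, key=lambda item: item[1])


if __name__ == "__main__":
    for name, cost in rank_plans():
        print(f"{name:<16} ${cost:6.2f} per TFLOPS per month")
```

If your workload is bound by system RAM or NVMe capacity rather than raw GPU throughput, the Pro plans may still be the better fit despite a higher price per TFLOPS.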

Technical highlights of available GPUs.

| GPU | Memory | CUDA Cores | TFLOPS (FP32) |
|---|---|---|---|
| GTX 1080 Ti | 11 GB GDDR5X | 3584 | 11.3 |
| RTX 2080 Ti | 11 GB GDDR6 | 4352 | 13.5 |
| RTX A4000 | 16 GB ECC GDDR6 | 6144 | 19.2 |
| RTX A6000 | 48 GB ECC GDDR6 | 10752 | 38.7 |
| Tesla V100 | 16-32 GB HBM2 | 5120 | 15.7 |
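The FP32 figures above follow from the core counts: each CUDA core can complete one fused multiply-add (two floating-point operations) per clock, so peak TFLOPS is roughly 2 × cores × boost clock. The sketch below reproduces the table under that rule. The boost clocks are assumed reference values that do not appear on this page, and partner cards or actual server hardware may run at different clocks.

```python
# name: (CUDA cores from the table, boost clock in GHz).
# The clocks are ASSUMED reference boost values, not taken from this page.
GPUS = {
    "GTX 1080 Ti": (3584, 1.582),
    "RTX 2080 Ti": (4352, 1.545),
    "RTX A4000": (6144, 1.560),
    "RTX A6000": (10752, 1.800),
    "Tesla V100 (SXM2)": (5120, 1.530),
}


def peak_fp32_tflops(cuda_cores, boost_ghz):
    """Theoretical peak: one fused multiply-add (2 FLOPs) per core per clock."""
    gflops = 2 * cuda_cores * boost_ghz
    return gflops / 1000.0


if __name__ == "__main__":
    for name, (cores, clock) in GPUS.items():
        print(f"{name:<18} {peak_fp32_tflops(cores, clock):5.2f} TFLOPS")
```

These are theoretical peaks; sustained throughput depends on memory bandwidth, kernel efficiency and thermal limits.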

Measured latency and bandwidth from our Turkey datacenter.

| Location | Ping (ms) | Download (Mbps) | Upload (Mbps) |
|---|---|---|---|
| Amsterdam | 69 | 890 | 870 |
| Frankfurt | 60 | 850 | 890 |
| London | 74 | 850 | 875 |
| New York City | 142 | 780 | 590 |
| Boston | 139 | 700-910 | 500-630 |
| San Francisco | 207 | 700-920 | 200-450 |
| Toronto | 147 | 800 | 590 |
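These measurements can be turned into two practical numbers: the bandwidth-delay product (how much data must be in flight to keep a single TCP connection full) and the time needed to move a dataset. The sketch below is illustrative only. It uses the single-valued rows from the table, assumes decimal units (1 Mbps = 10^6 bits/s, 1 GB = 10^9 bytes), treats ping as round-trip time, and assumes the measured rate is sustained for the whole transfer; the 100 GB dataset is a hypothetical example.

```python
# location: (ping in ms, download Mbps, upload Mbps) from the table above.
# Rows reported as ranges (Boston, San Francisco) are omitted here.
MEASUREMENTS = {
    "Amsterdam": (69, 890, 870),
    "Frankfurt": (60, 850, 890),
    "London": (74, 850, 875),
    "New York City": (142, 780, 590),
    "Toronto": (147, 800, 590),
}


def bdp_bytes(rtt_ms, mbps):
    """Bandwidth-delay product: bytes in flight needed to fill the link."""
    bytes_per_second = mbps * 1e6 / 8
    return bytes_per_second * (rtt_ms / 1e3)


def transfer_seconds(size_gb, mbps):
    """Time to move `size_gb` gigabytes at a sustained rate of `mbps`."""
    return size_gb * 8e9 / (mbps * 1e6)


if __name__ == "__main__":
    dataset_gb = 100  # hypothetical dataset size
    for city, (ping, down, _up) in MEASUREMENTS.items():
        window_mb = bdp_bytes(ping, down) / 1e6
        minutes = transfer_seconds(dataset_gb, down) / 60
        print(f"{city:<14} window ~{window_mb:5.1f} MB, "
              f"{dataset_gb} GB in ~{minutes:4.1f} min")
```

On high-latency routes, a TCP window smaller than the bandwidth-delay product caps single-stream throughput below the measured rate, so parallel streams or larger socket buffers may be needed to reach these speeds.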

Below are the most common questions customers ask before deploying GPU servers on Thoplam.